When is a Trend a Liability?

With Amplify, Outreach gave teams the power to harness machine learning to A/B test their messaging and home in on what works when trying to connect with buyers. But what happens when the test results start trending negatively? How long should you wait before ending the experiment? How do you know whether the trend will stay negative or turn around toward positive results? How should you balance the need for statistical significance with business significance? Let’s find out.

Business Impact of Negative Trends

A negative-trending experiment is one where the results from the treatment (the new idea you are trying, such as a new email template) appear worse than those from the control (the old template), but not in a statistically significant way.

For instance, let’s say you wanted to test whether an email greeting that addresses someone by their first name performs better or worse than one that addresses them more formally with a prefix like Mr. or Mrs., the current standard used in your templates.

After setting up and starting your A/B test, let’s say that you start seeing fewer opens and replies in the test group, which addresses the prospect by first name, than in the control group, which uses Mr./Mrs.

This drop in open and reply rates is what we call a negative trend.

Naturally, you feel worried and wonder if you should shut this experiment down and declare it a failure before losing more replies. However, you have also heard us and others say that results that are not statistically significant may be due to chance.
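To make "may be due to chance" concrete, here is a minimal sketch of one common way to check significance: a pooled two-proportion z-test on reply rates. This is an illustration, not a description of how Amplify computes significance. The function names, the 0.05 threshold, and the counts in the usage lines are all assumptions made up for the example.

```python
import math


def two_proportion_p_value(control_replies, control_sent, test_replies, test_sent):
    """Two-sided p-value from a pooled two-proportion z-test on reply rates."""
    pooled = (control_replies + test_replies) / (control_sent + test_sent)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / control_sent + 1 / test_sent))
    if std_err == 0:
        return 1.0  # identical all-or-nothing outcomes: no evidence of a difference
    z = (test_replies / test_sent - control_replies / control_sent) / std_err
    # Two-sided normal tail probability: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2))
    return math.erfc(abs(z) / math.sqrt(2))


def is_negative_trend(control_replies, control_sent, test_replies, test_sent, alpha=0.05):
    """True when the test group looks worse but the gap is not significant.

    alpha=0.05 is a conventional threshold assumed here, not a value from this post.
    """
    worse = test_replies / test_sent < control_replies / control_sent
    p_value = two_proportion_p_value(control_replies, control_sent, test_replies, test_sent)
    return worse and p_value >= alpha


# Made-up counts: 20 replies out of 200 emails (control) vs. 12 out of 200 (test).
print(f"p-value: {two_proportion_p_value(20, 200, 12, 200):.2f}")
print("negative trend:", is_negative_trend(20, 200, 12, 200))
```

With counts like these, the test group's reply rate is visibly lower, yet the p-value stays above the assumed threshold, which is exactly the uncomfortable situation described above.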

So what should you do? Stop the experiment or wait longer?

Recommendations for When to End an Experiment

To start, it’s important to define clear criteria for shutting down the experiment before it begins, and then to adhere to them while it runs. Deciding on the fly invites statistical issues, which we will discuss in a future post.

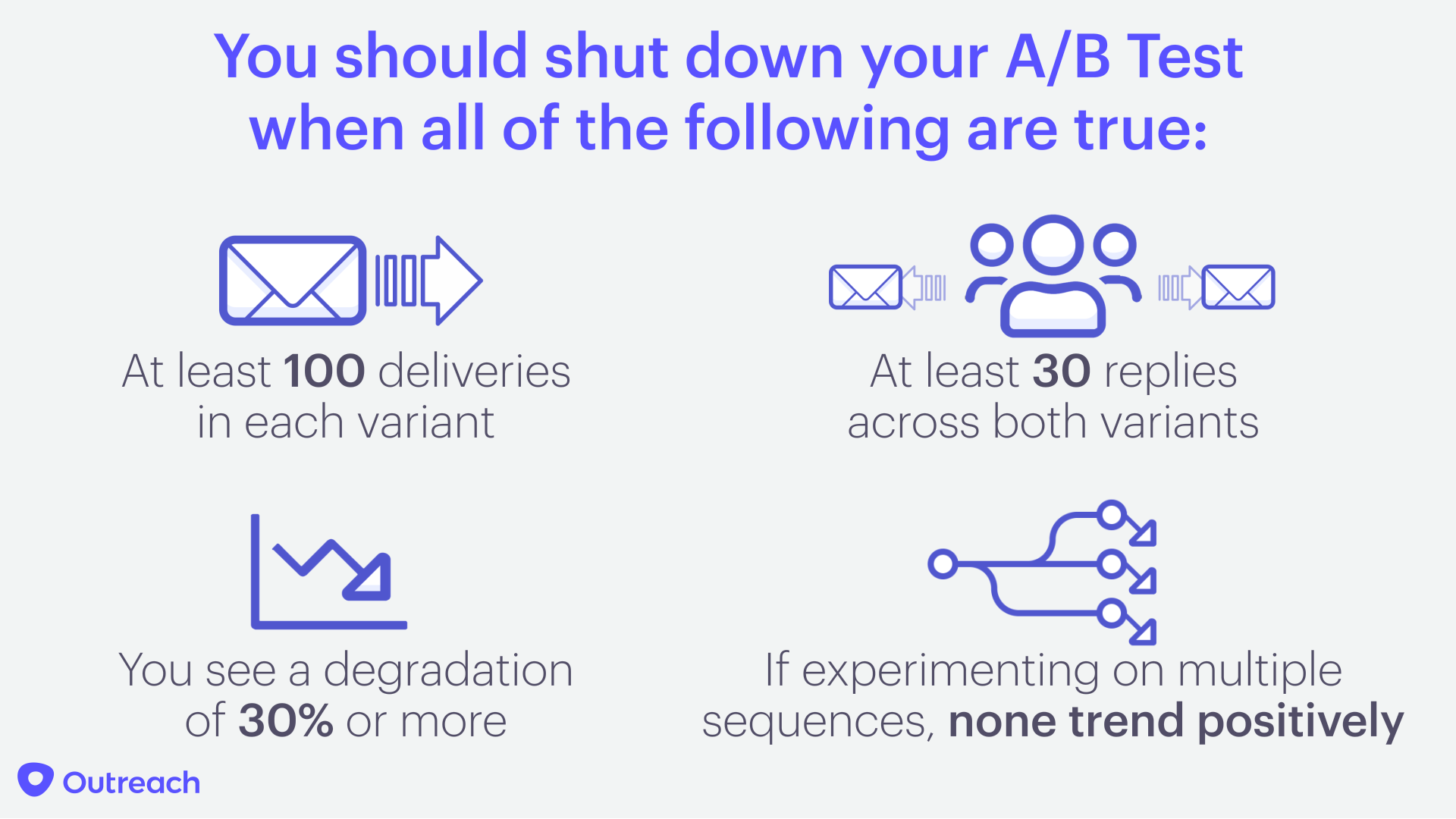

At Outreach, we recommend shutting down an experiment when all four of the following conditions are true:

- (a) we have at least 100 deliveries in each variant

- (b) we have at least 30 replies combined across both variants

- (c) we see a degradation of 30% or more in replies

- (d) additionally, if the experiment is running on multiple sequences, none of them show a positive trend
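The four conditions above can be sketched as a single check. This is a minimal illustration under stated assumptions: `VariantStats` and `should_stop` are hypothetical names, and condition (c) is interpreted here as a relative drop of 30% or more in the treatment's reply rate compared with the control's, which is one reasonable reading of "degradation in replies".

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class VariantStats:
    """Delivery and reply counts for one variant (hypothetical container)."""
    deliveries: int
    replies: int

    @property
    def reply_rate(self) -> float:
        return self.replies / self.deliveries if self.deliveries else 0.0


def should_stop(control: VariantStats,
                treatment: VariantStats,
                sequence_trends_positive: Iterable[bool] = ()) -> bool:
    """Return True only when conditions (a) through (d) all hold."""
    # (a) at least 100 deliveries in each variant
    enough_deliveries = min(control.deliveries, treatment.deliveries) >= 100
    # (b) at least 30 replies combined across both variants
    enough_replies = control.replies + treatment.replies >= 30
    # (c) reply-rate degradation of 30% or more (assumed: relative to control)
    if control.reply_rate > 0:
        degradation = (control.reply_rate - treatment.reply_rate) / control.reply_rate
    else:
        degradation = 0.0
    degraded = degradation >= 0.30
    # (d) no sequence shows a positive trend (one flag per sequence, True = positive)
    no_positive_sequence = not any(sequence_trends_positive)
    return enough_deliveries and enough_replies and degraded and no_positive_sequence


# Made-up counts for illustration only.
control = VariantStats(deliveries=200, replies=24)
treatment = VariantStats(deliveries=200, replies=10)
print(should_stop(control, treatment, sequence_trends_positive=[False, False]))
```

Writing the rule down as code before the experiment starts is one way to make sure the criteria are fixed in advance rather than adjusted once the results begin to look worrying.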

These criteria attempt to formally capture the point in time when the business significance of the degradation, or the impact on your prospects, becomes big enough to override the desire to learn the true impact of the treatment. In other words, the high “cost of learning” and the potential impact on your prospects and deals no longer justify continuing the experiment.

When your experiment meets the above criteria, there may still not be enough data to determine whether the negative trend is real. Even so, we can be fairly certain that it is not going to do a 180-degree turn and become a positive result.

Conclusion

A/B testing provides a data-driven way to validate your ideas, separating the good from the bad and enabling continuous, iterative improvement in revenue efficiency.

While doing this, it is important to minimize the harm from bad ideas, detecting and discarding them quickly.

We hope this advice gives you a better idea of how to test your ideas while protecting your prospects, and of when to call it quits.